YouTube Video

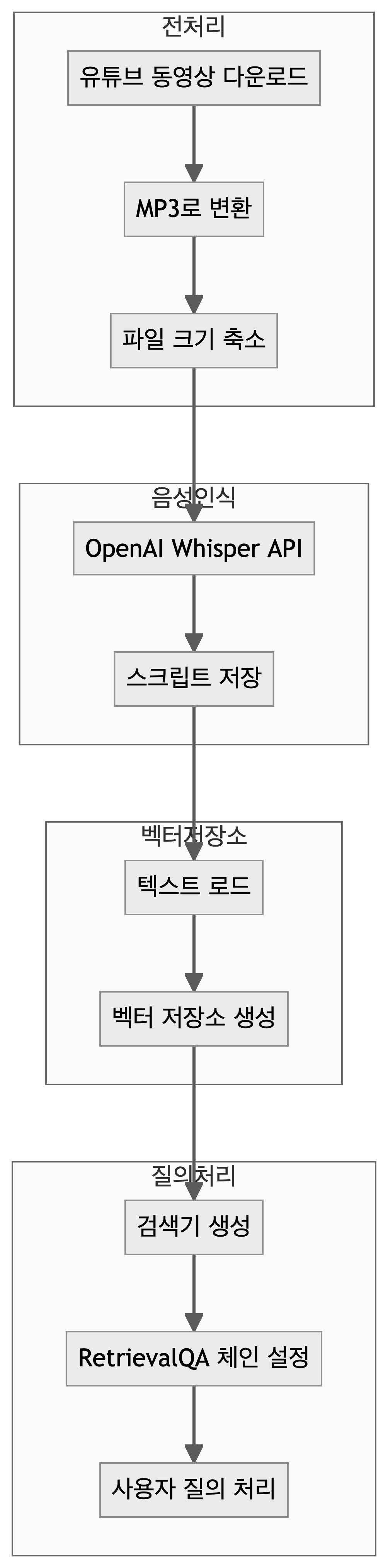

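At the 2023 Seoul R Meetup, we hosted a special lecture by Professor Jevin West of the University of Washington on "ChatGPT and Misinformation". Our limited budget did not allow us to invite him in person, so the talk was given and recorded over Zoom instead.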

Unneeded parts of the roughly one-hour recording are trimmed with OpenShot Video Editor.

Because the final goal is to upload the talk to YouTube with Korean subtitles, only the audio track (not the video frames) is extracted from the .mp4 file and saved as a .wav or .mp3 file.
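Extracting only the audio track is a single ffmpeg call. The sketch below only builds the command; it assumes the `ffmpeg` binary is installed, and the helper name `build_audio_extract_cmd` and the file names are hypothetical. For .wav output it uses 16-bit, mono, 16 kHz PCM (the settings in the whisper.cpp README's conversion example); for .mp3 it uses the LAME encoder at a fixed bitrate.

```python
from pathlib import Path


def build_audio_extract_cmd(src: str, dst: str, mp3_bitrate: str = "192k") -> list[str]:
    """Build an ffmpeg command that keeps only the audio track of `src`.

    The output format is chosen from the extension of `dst`:
    - .wav: 16-bit PCM, mono, 16 kHz
    - .mp3: LAME-encoded MP3 at `mp3_bitrate`
    """
    ext = Path(dst).suffix.lower()
    cmd = ["ffmpeg", "-y", "-i", src, "-vn"]  # -y: overwrite output, -vn: drop video
    if ext == ".wav":
        cmd += ["-ar", "16000", "-ac", "1", "-c:a", "pcm_s16le"]
    elif ext == ".mp3":
        cmd += ["-c:a", "libmp3lame", "-b:a", mp3_bitrate]
    else:
        raise ValueError(f"unsupported output format: {ext}")
    return cmd + [dst]


if __name__ == "__main__":
    # Hypothetical file names; execute with subprocess.run(cmd, check=True)
    print(build_audio_extract_cmd("lecture.mp4", "lecture.wav"))
```

Building the argument list separately from running it keeps the format choice easy to inspect before handing it to `subprocess.run`.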

Next, the English speech has to be transcribed into English text (STT, Speech-to-Text). Several models are available; we use OpenAI Whisper, which is widely regarded as one of the best performers.

Whisper is available both as a hosted speech-to-text model through OpenAI's API (the Whisper API) and as an open-source release on GitHub. The API serves the same open-source large-v2 model, but OpenAI's optimized serving stack lets developers get results much faster and more cost-effectively. (In the same announcement, OpenAI reported that system-wide optimizations had cut the cost of ChatGPT by 90% since December 2022.)

STT quality has improved considerably in recent years. Besides the Whisper API, which has become popular alongside ChatGPT, you can use whisper.cpp, a C/C++ port of OpenAI's Whisper model, to run transcription on a CPU for free. The recently released audio.whisper package wraps whisper.cpp, so STT can now be done easily from R as well.

| Model | Language | Size | Required RAM |
|---|---|---|---|
| tiny & tiny.en | Multilingual & English only | 75 MB | 390 MB |
| base & base.en | Multilingual & English only | 142 MB | 500 MB |
| small & small.en | Multilingual & English only | 466 MB | 1.0 GB |
| medium & medium.en | Multilingual & English only | 1.5 GB | 2.6 GB |
| large-v1 & large | Multilingual | 2.9 GB | 4.7 GB |
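To pick a model for a given machine, the RAM column of the table can be turned into a simple lookup. A minimal sketch: `pick_whisper_model` and `WHISPER_RAM_GB` are hypothetical names, the numbers are copied from the table, and actual memory use will vary with the build and settings.

```python
# Approximate RAM per model in GB, copied from the table above
# (390 MB and 500 MB written as 0.39 and 0.5 GB), smallest to largest.
WHISPER_RAM_GB = [
    ("tiny", 0.39),
    ("base", 0.5),
    ("small", 1.0),
    ("medium", 2.6),
    ("large", 4.7),
]

# Only these models have an English-only ".en" variant in the table.
HAS_EN_VARIANT = {"tiny", "base", "small", "medium"}


def pick_whisper_model(available_gb: float, english_only: bool = False) -> str:
    """Return the largest model whose RAM requirement fits in available_gb."""
    fitting = [name for name, ram_gb in WHISPER_RAM_GB if ram_gb <= available_gb]
    if not fitting:
        raise ValueError(f"no Whisper model fits in {available_gb} GB of RAM")
    best = fitting[-1]  # list is ordered smallest to largest
    if english_only and best in HAS_EN_VARIANT:
        best += ".en"
    return best


print(pick_whisper_model(3.0, english_only=True))  # medium.en
print(pick_whisper_model(8.0))  # large
```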

The whisper() function in audio.whisper accepts only 16-bit .wav files as input. We therefore convert the original file (.flac) to .wav with av_audio_convert() from the R av package before running STT.
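Before calling whisper(), it can help to confirm that the converted file really is 16-bit PCM. A minimal sketch using Python's standard `wave` module; the helper name `check_wav_for_whisper` is hypothetical, and the mono and 16 kHz checks follow the ffmpeg conversion example in the whisper.cpp README rather than anything stated above.

```python
import wave


def check_wav_for_whisper(path: str) -> list[str]:
    """Return a list of problems with `path`; an empty list means it looks usable.

    wave.open() itself raises wave.Error for non-PCM files (e.g. 32-bit float WAV).
    """
    problems = []
    with wave.open(path, "rb") as wav:
        sample_bits = 8 * wav.getsampwidth()  # getsampwidth() is bytes per sample
        if sample_bits != 16:
            problems.append(f"{sample_bits}-bit samples, expected 16-bit")
        if wav.getnchannels() != 1:
            problems.append(f"{wav.getnchannels()} channels, expected mono")
        if wav.getframerate() != 16000:
            problems.append(f"{wav.getframerate()} Hz, expected 16000 Hz")
    return problems


if __name__ == "__main__":
    # Write one second of 16-bit mono silence at 16 kHz, then check it.
    with wave.open("silence.wav", "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(16000)
        out.writeframes(b"\x00\x00" * 16000)
    print(check_wav_for_whisper("silence.wav"))  # []
```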

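Environment setup

```bash
pip install openai
pip install unstructured
pip install langchain-community
pip install langchain-core
pip install langchain-openai
pip install yt_dlp
pip install tiktoken
pip install docarray
# Also needed by the code below: python-dotenv (load_dotenv) and pydub (MP3 resizing)
pip install python-dotenv pydub
# yt_dlp's FFmpegExtractAudio and pydub both need the ffmpeg binary on PATH
```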

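```python
import os
import glob

from openai import OpenAI
import yt_dlp as youtube_dl
from yt_dlp.utils import DownloadError
from dotenv import load_dotenv

# Read OPENAI_API_KEY (and any other settings) from a local .env file
load_dotenv()

# OpenAI() reads OPENAI_API_KEY from the environment automatically;
# fail early here if it is missing instead of at the first API call
openai_api_key = os.getenv("OPENAI_API_KEY")
if not openai_api_key:
    raise RuntimeError("OPENAI_API_KEY is not set (check your .env file)")
```

Downloading the YouTube video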

```python
# The Seoul R Meetup lecture video
youtube_url = "https://www.youtube.com/watch?v=3t9nPopr0QA"

# Directory to store the extracted audio
output_dir = "data/audio/"

# yt-dlp options: download the best audio stream and convert it to a 192 kbps MP3
# (FFmpegExtractAudio requires ffmpeg on PATH)
ydl_config = {
    "format": "bestaudio/best",
    "postprocessors": [
        {
            "key": "FFmpegExtractAudio",
            "preferredcodec": "mp3",
            "preferredquality": "192",
        }
    ],
    # Name the file after the video title, e.g. data/audio/<title>.mp3
    "outtmpl": os.path.join(output_dir, "%(title)s.%(ext)s"),
    "verbose": True,
}

os.makedirs(output_dir, exist_ok=True)

try:
    with youtube_dl.YoutubeDL(ydl_config) as ydl:
        ydl.download([youtube_url])
except DownloadError:
    # Retry once in case of a transient network error
    with youtube_dl.YoutubeDL(ydl_config) as ydl:
        ydl.download([youtube_url])
```
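The try/except above retries the download exactly once after a `DownloadError`. If transient network errors are common, a small retry loop with a growing pause is one option. A minimal sketch: `download_with_retry` and its `attempts` and `wait_seconds` parameters are hypothetical names, and the download step is passed in as a function so the loop can be tried without network access.

```python
import time
from typing import Callable, Type


def download_with_retry(
    download: Callable[[], None],
    retry_on: Type[Exception] = Exception,
    attempts: int = 3,
    wait_seconds: float = 5.0,
) -> int:
    """Call `download` until it succeeds; return the number of attempts used.

    Only exceptions of type `retry_on` trigger a retry; the last failure is re-raised.
    """
    for attempt in range(1, attempts + 1):
        try:
            download()
            return attempt
        except retry_on:
            if attempt == attempts:
                raise
            time.sleep(wait_seconds * attempt)  # wait a little longer after each failure
    raise ValueError("attempts must be at least 1")


# With yt_dlp, wrapping the code above (not executed here):
# def _download():
#     with youtube_dl.YoutubeDL(ydl_config) as ydl:
#         ydl.download([youtube_url])
# download_with_retry(_download, retry_on=DownloadError)
```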

```r
# Embed the downloaded MP3 in the page for playback (R, embedr package)
library(embedr)
embed_audio("data/audio/챗GPT와 오정보(Misinformation).mp3")
```

```python
import os

from pydub import AudioSegment


def reduce_mp3_file_size(
    input_file, output_file, target_size_mb=24, initial_bitrate=128
):
    """Re-encode an MP3 so it fits under target_size_mb.

    The Whisper API rejects uploads over 25 MB, hence the 24 MB default.
    """
    # Load the audio file (pydub decodes via ffmpeg)
    audio = AudioSegment.from_mp3(input_file)

    # Get the current file size in MB
    current_size_mb = os.path.getsize(input_file) / (1024 * 1024)

    if current_size_mb <= target_size_mb:
        # Already small enough: re-export at initial_bitrate (this re-encodes, not a byte copy)
        audio.export(output_file, format="mp3", bitrate=f"{initial_bitrate}k")
    else:
        # Bitrate that fits the budget: kilobits available / seconds of audio
        duration_seconds = len(audio) / 1000  # len() of an AudioSegment is in milliseconds
        target_bitrate = int((target_size_mb * 8 * 1024) / duration_seconds)

        # 32 kbps is usually the lowest reasonable bitrate; for very long
        # recordings this floor can still exceed the size target
        target_bitrate = max(target_bitrate, 32)

        # Export with the new bitrate
        audio.export(output_file, format="mp3", bitrate=f"{target_bitrate}k")

    new_size_mb = os.path.getsize(output_file) / (1024 * 1024)
    print(f"Original size: {current_size_mb:.2f} MB")
    print(f"New size: {new_size_mb:.2f} MB")
    print(f"Reduced file saved as {output_file}")


# Usage
input_file = "data/audio/챗GPT와 오정보(Misinformation).mp3"
output_file = "data/audio/reduced_audio_file.mp3"
reduce_mp3_file_size(input_file, output_file)
```
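The bitrate formula in `reduce_mp3_file_size` can be checked by hand. For a one-hour recording (3,600 s) and the default 24 MB target, which stays just under the API's 25 MB upload limit, it gives 24 × 8 × 1024 / 3600 ≈ 54.6, truncated to 54 kbps. The sketch below isolates that arithmetic; the helper names are hypothetical, and the size estimate ignores container overhead and any encoder rounding.

```python
def target_bitrate_kbps(target_size_mb: float, duration_seconds: float, floor_kbps: int = 32) -> int:
    """Same formula as reduce_mp3_file_size: size budget in kilobits / duration."""
    return max(int((target_size_mb * 8 * 1024) / duration_seconds), floor_kbps)


def estimated_size_mb(bitrate_kbps: int, duration_seconds: float) -> float:
    """Approximate MP3 size in MiB; ffmpeg's "k" in a bitrate means 1000 bits/s."""
    return bitrate_kbps * 1000 * duration_seconds / 8 / (1024 * 1024)


one_hour = 3600
rate = target_bitrate_kbps(24, one_hour)
print(rate)  # 54
print(round(estimated_size_mb(rate, one_hour), 2))  # 23.17
```

Because the formula counts 1024 kilobits per megabyte while the encoder's "k" is 1000, the result lands slightly under the target, which leaves a small safety margin.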

```r
library(embedr)
embed_audio("data/audio/reduced_audio_file.mp3")
```

Audio → Text

We use the Whisper model to transcribe the speech in the audio into text.

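```python
# The folder now holds both the original MP3 and the reduced copy, and glob()
# returns files in arbitrary order; pick the reduced file explicitly, since
# the original is too large to upload
audio_filename = os.path.join(output_dir, "reduced_audio_file.mp3")
print(audio_filename)

output_file = "data/transcript.txt"
model = "whisper-1"

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# Open the audio in binary mode; the file is closed once the upload is done
with open(audio_filename, "rb") as audio_file:
    transcript = client.audio.transcriptions.create(model=model, file=audio_file)

print(transcript.text)

# Save the transcript as UTF-8 text
with open(output_file, "w", encoding="utf-8") as file:
    file.write(transcript.text)
```

Loading the text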

The text transcribed by OpenAI Whisper is then loaded with LangChain's TextLoader.

```python
from langchain_community.document_loaders import TextLoader

# Load the transcript as a LangChain Document (UTF-8, matching how it was written)
loader = TextLoader("./data/transcript.txt", encoding="utf-8")
docs = loader.load()
docs[0]
```

Document(page_content="Welcome everybody to our Seoul RMeetup online seminar where we explore various ideas and practices of data science. We are really happy today to have Jebin West from the University of Washington with us to talk about tragedy and misinformation cutting through a bullshit navigating misinformation and disinformation in the generative AI era. Jebin West is an associate professor in the information school at the University of Washington. He is the director and co-founder of the Center for Informed Public whose mission is registering strategic misinformation promoting an informed society and strengthening the democratic discourse. He is the co-director of data lab and the data science fellow at eScience Institute. He also served its affiliate faculty with the Center for Statistics and Social Sciences. He studies the science of science focusing on the impact of data technology and on slowing the spread of misinformation. Jebin also has teamed up with Carl Bostrom to launch the Calling Bullshit project developing a website and course materials for teaching quantitative reasoning and information literacy. He published the book Calling Bullshit the Art of Skepticism in a Data-Driven World which has been translated into Korean. Jebin I'll hand it over to you now to present your topic and share your insight with us. Well thank you very much Dr. Ahn. This is a real pleasure to be able to speak across the ocean. I wish I was there in person but I did get the opportunity to meet many of your students during your visit in Seattle at the University of Washington and that was a real real pleasure. Yes. So thank you so much. So today I'm going to talk about some of the excitement around generative AI and some and these chatbots that we're seeing everywhere in our world but but mostly I'm going to talk a lot about some of the concerns I have around these chatbots and how they may contribute to this growing problem of misinformation in our digital worlds. 
So I'm going to share my screen here. There we go. Here share and there we go. All right. Can you see that Dr. Ahn? Yes. Okay great. Okay so as Dr. Ahn said I study misinformation and disinformation in all sorts of forms. Where I study misinformation the most is at the interface of science and society but we also study it more generally in our center where we look at it from the political angle, from the angle of science, from the angle of health. We've studied misinformation during the pandemic and during elections and all sorts of other topics but today I'm going to focus on some of my concerns around generative AI and hopefully that will generate some questions and comments when it comes to this particular topic. I'm sure a lot of us are thinking about it. So one of the things that I tend to ask myself when new technologies come onto the world scene is whether we're better or worse off. I recently wrote an op-ed, an opinion piece for the Seattle Times which is our paper here in Seattle in the United States and I talked about some of the concerns I had and so some of the things I'm going to talk about in this talk come from that op-ed but I'm going to talk about a lot of other things I didn't have room to talk about in that particular op-ed. But I really want us as a group to think a lot about this. You know are we better or worse off with this new chatbot ability or and of course it's a mixed bag. There are things that might be better and there are things that are going to could be worse. I'm going to kind of focus on the more pessimistic version of this. About a week ago there was a lot of attention around a new music song that was posted on several music services that sounded like a mix between the famous musician Drake and The Weeknd. 
These are two musical artists that are known worldwide for their music and there was a song that was created that was quite catchy and believe it or not it was kind of good I have to admit and it was probably viewed by millions and millions of people. I don't know the exact stats on that. I should look that up but the point is this song was created with some of this new generative AI technology and this is an example where there might be some positive elements of this technology that allows for this mixing and this creation. It created a song that caught enough people's attention that it you know it caught so much attention it actually had to be taken down because of issues likely around copyright and there's all sorts of fallout from this and there's lots of discussions in the legal world that are continuing and it's only been about a week since this song was released but this is an example of the excitement that surrounds this technology and for good reason. If it can create a song that's a good mix of Drake and The Weeknd and it sounds reasonably good that's some evidence at least of the the power of this new technology and it's not like this is brand new technology. There is this kind of technology from the natural language processing world and and machine learning more generally has been around but there's been some advancements recently that make the generative aspect quite exciting but also a little scary. So I'm going to talk about today some of the the cautions that I have. There's a lot more cautions but these are the things I'm going to focus on and it's a lot to focus on in about 25 minutes so I'm going to hit these briefly and then if there are questions we can always go back to some of these topics in more detail but I'm going to go through talking about how at least from my perspective as someone who studies misinformation and as someone who studies misinformation specifically in science these are some of my big concerns. 
One of them is that these really are bullshitters at scale. They get a lot right but they also get some things wrong. Concerns around how this might affect democratic discourse online and offline. Concerns about content credit for all those content creators out there the musicians like Drake and The Weekend and the writers and the journalists and the researchers and the authors and the poets etc. What happens to their content when it gets pushed down after these generative AI, these chatbots and these large language models train on this content and then provide summaries and new content on top of it? Who owns the content? How's that going to work as we move forward? I'll talk a little bit about some of the job elimination issues both of course in science but in other areas it'll be that some of these are focused outside of the issue of science and then of course I'm going to talk about some of the issues of pseudoscience proliferation, the overconfidence of AI and the need for some of these qualifiers of confidence, the issues around reverse engineering, the generative cost, the actual cost both monetarily, environmentally but also the costs in other forms in creativity and other things and then I'll talk I'll end with just really emphasizing this issue about garbage in garbage out. Okay so we now live in this world where it seems we've got almost sentient beings. 
I know there's lots of philosophical debates whether they're sentient I don't think they are and we certainly haven't reached AGI levels and there's all sorts of great you know critiques of this particular technology but one thing that at least I think we're all pretty sure of it's it's kind of here to stay in some form or another even in my world in education as a professor who runs a research lab and also as a professor who teaches students, college students, I'm seeing the technology everywhere and when I was teaching my class last quarter I decided to just embrace the technology and allow the students to use it in any form that they see as long as they let me know that they were using it. That's my only criteria because I want to learn how this technology can be used by students and by teachers like myself and professors like myself but I also want to figure out where it goes wrong and so I learned a lot from my students and I will continue to have that kind of policy but maybe that'll change at some point. I know that some instructors don't allow it. I know that some scientific publishers are not allowing co-authorship or the use of AI in any form. Even countries like Italy have outlawed some forms of this technology and so there's going to be some that are going to eliminate it, some that are going to embrace it and the way I look at it is that it may just force me to write different kinds of questions and do different kinds of assessment but it might also be a tool that could help students learn to write when they're stuck and learn how to correct as long as they're willing to do some editing and self-correct and correction of the content that comes from these different bots and so the technology really is here to stay. 
And one thing I should say is what's interesting is that we have a technology that really seems to have passed the Turing test and one thing that's a bit a little surprising to me is that we haven't had a big celebration about this even though I'm, you know, I'm a bit of a critic of this technology and I focus a lot of my attention on some of the concerning areas of generative AI since I study misinformation and disinformation. I should say though on the other hand we should be thinking about some of the things that have occurred and for those not familiar with the Turing test it's this pretty simple idea that the Turing test will have passed when an individual can't tell the difference between content, you know, at least in this case written content created by a human and one by a computer and if that's the case and I do think in many respects we probably have passed this Turing test and there's been no celebration so I guess okay for those that are optimistic about this technology and more positive about this technology this would be something to celebrate absolutely no doubt. And in terms of education as I mentioned before this is really transforming education but it's transforming all sorts of other industries in ways that have captured the world's attention. I mean this technology has been adopted now faster than pretty much any other technology has been adopted with hundreds like a hundred million users within a very short amount of time and of course that's only growing and billions and billions of dollars being invested into this technology from big corporations to venture capitalists. There is a lot going into this technology and again there's good reason for that. 
And a lot of times my students will say well you know it's just like a calculator why would you ever want to take it away and I'm not taking I'm allowing them to use it but I will say it's a little different than a calculator because this is a technology that gets this things wrong at minimum probably 10 percent of the time. I mean these things are starting to still get sorted out in the research space we're working on some of these things to figure out you know what is the baseline error rate. Well it turns out it's pretty high and in some cases the impact can be quite high. So if you're talking about the medical field or you're talking about fields that really have an impact on an individual's lives that 10 percent or 20 percent or 5 percent or whatever those errors can be highly problematic. If you're just creating a poem then fine it doesn't really matter but I this analogy to the calculator goes a little it's there's reason to think that's a you know somewhat reasonable analogy but it but it is different and that it's not technology I wouldn't use a calculator that got the answers wrong 10 percent of the time. 
That would be I would probably be looking for other technologies or if I was using that calculator it would make it for a lot harder work and that's what we have to recognize that it's yes we have this technology that can do a lot of this new amazing things to make some of our jobs easier but it's going to take a lot of hard work on the editorial side to make sure that we're paying it that we're paying attention to some of those errors and there's all sorts of application this was a article just recently written by the by wired sort of examining the ways in which the medical field is starting to think about the adoption of this and in fact some medical researchers have gone so far as to say that all doctors will be using this technology at some point and and maybe that's true and there are reasons to think that's a possibility with its ability to mine the scientific literature to to integrate all sorts of different symptoms and could be that assistant in the doctor's room however there are reasons to also be worried about these technologies and in places like the medical field it might help the doctors as this article talks about but it also might not benefit so greatly many of those patients especially because we have many many examples of the ways in which these machines get things wrong and and have biases built in because the data that it's trained on has some of these biases and at this point a lot of these technologies are essentially just patching and putting band-aids on these problems that exist and part of it's just because these things are very difficult to reverse engineer which i'll talk about a little bit later now up there's plenty other reasons to be excited too actually one of my colleagues who i just saw at a conference last week uh daniel katz and his colleague michael bombarito um showed how this technology took the bar exam this is the main legal the the exam in the united states for allowing you to become a lawyer or sort of allowing you to 
sort of move forward as a certified lawyer lawyer um and they were able to show that chat gpt did pretty darn well and passed the bar exam and there's examples of the mcat and you know some of these other standardized tests that are go that have been uh tested with this technology in fact in many ways these have almost become a baseline test when comparing different large language models um and so uh it is pretty amazing so again amazed in many ways but i'm going to talk the rest of the time about some of the concerns that i have and and by the way uh as was mentioned i have this book where i talk about bullshit and and bullshit is a is a part of the misinformation story um and as dr on had mentioned it's also been translated in korean which has been super fun to see it uh uh pulled uh or written in these other languages and it helps me try to figure out what's being uh said here but in that book if you read it one of the more important laws and principles that we talk about is something called brandolini's bullshit asymmetry principle and if you go to wikipedia at least the english version i actually should check the korean version see if it's there but the english version of the wikipedia has this principle um in the wikipedia and this law is pretty simple it basically says that the amount of energy needed to refute bullshit is an order of magnitude bigger than needed to produce it so the amount of energy needed to refute it to clean it up to fix the problem is an order of magnitude much harder to produce it so here's the law so my colleague carl bergstrom decided to ask the large language model that meta created uh a while ago called galactica and this is you know it's been taken down since then but this was the science version essentially of one of these large language models and he decided to ask galactica tell me about uh brandolini's bullshit uh bullshit asymmetry principle and you know what it came up with and this is the real answer here's what it came up 
brandolini's law is a theory in economics proposed by giannani brandolini a professor at the university of padua which takes the smaller the economic unit the greater its efficiency almost nothing here is correct it's basically bullshitting the bullshit principle so to me this encapsulates one of the biggest problems with this technology it bullshits and it can do this at scale and this could be put in the wrong hands and this can also just add more noise to an information environment that has plenty of noise we need to to clean up that polluted information environment not add noise and that's what a lot of these chatbots will do definitely because they make they they put a lot of accurate things out there but they also put a lot of false things and this is the best encapsulation that uh i i've seen so far uh which is actually bullshitting the bullshit principle so that's a problem so as i mentioned uh in this um seattle uh this op-ed i talk about some of these things um and one of the things i talk about as well is that not only do these things can these bullshit at scale um they also can get in the way of democratic discourse and and many years ago back in 2018 there was a lot of attention around facebook's role in pushing misinformation excuse me and they revealed at the time when they were being when mark zuckerberg was being questioned by congress about all the fake accounts and they admitted they had disabled 1.3 billion fake accounts now they certainly haven't solved that problem just like no social media platform has solved that in fact when elon musk was taking over twitter that was one of the big issues at hand that there was all sorts of concerns if there was lots and lots of bots on twitter now that's still a problem i can guarantee you you know my group has done a little bit of work working uh in detecting and looking the effects of bots but my colleagues in in my research area have done a lot of work in that space and it's very very hard to do but one 
thing is that you know we do know is that there are a lot of bots out there and a lot of fake counts now imagine those fake counts with the ability that these chatbots now have to look even more human technology that's basically well has passed the turing test that to me is problematic because of these different reasons so you have these large number of fake accounts now imagine these fake accounts being scaled to conversations with our public officials now democracies depend on an engagement with the public with their officials that they voted in well that becomes a problem like it was back in 2017 when there was discussions at least in the united states around something called net neutrality and net neutrality was um a policy that was being debated in governmental circles and they want to know to know what the public was saying but when you went to what the public was saying they had complete the there were comments that it completely flooded the conversation on one side of the issue and it turns out that they were essentially fake accounts there were bots and again now imagine doing that with the sophistication that these new chatbots have before you could there was a you could start you could detect a bot much easier now it's become even harder and if if our democratic systems are flooded with these kinds of things this is this is problematic so this issue uh in its potential impact on democratic discourse and its um ability to bullshit at scale is of major concern to me so i spent a lot of my time like my colleagues in our center in sort of the darker corners of the internet studying the ways in which misinformation and disinformation spreads online and one thing that we are of course very concerned about now are the ways in which these technologies can can really further inflame or further fan the flames of discourse uh in groups if these get in the wrong hands and they certainly will i it's almost certain that bad actors are finding ways to use this 
technology um for their own ends and we know that you know you know our surgeon general and other major leaders around the world have recognized the ways in which misinformation can affect our health and they can affect the health of democracies so it's not just that oh well it's annoying there's a lot of false information online it just makes it hard to find you know good information it's not just that it actually affects people's health and we're recognizing that and at least well we've recognized it and now we've got another problem ahead of us which is the ability of these technologies to create deep fake images video audio text that's the the challenge we have ahead of us so as dr un had mentioned we have a center at the university of washington uh in seattle in the united states where we study this and this is one of the issues that we study and we study these things on social media platforms and we look at the way that individuals and organizations get amplified but we're also going to start looking at the ways that these bots and synthetically created content also get amplified so we do this through all sorts of different channels research is our main thing but we also do it through policy and education and community engagement and one of the things that my colleague carl bergstrom and i created several years ago to bring public attention to synthetic media to deep fakes was to create a game that we called which face is real.com and it was a simple game we just asked the users which image is real one of them is a real image of a real person on this earth and another one was synthetically created with computers and you can go through the you know thousands and thousands and thousands of images and we play this game and then you get told whether it's real or not and it turns out it's pretty hard i mean you know there's some that are kind of obvious but they're hard like this one right here which one's real look at it for a second well the one that's real is 
the one with the blue shirt at least on my right um and if you said the one on the red shirt totally understand it's really hard to tell the difference and the reason why we created this game was just to bring public attention because the the the time in a technology's birth that's i think most scary when it comes to its potential impact is when the public is not aware of the things that it can do so we created this game and we've had millions and millions of plays of this game um and we've now seen actually this technology you know get used and and you know of course good ways but a lot of bad ways too for example we've seen this technology be used to create fake journalists this is an example and you can read more about it but this has happened many times where journalists also so-called journalists um uh you know the profiles created and then there's these images and people that study deep fake imagery can actually look at these images and start to see what some look for some of these telltale signs on what's real or not so these this technology has been been already used and we talked about this when this game was created the ways in which it's being used and we've already seen it of course many ways so now we're asking how are the ways in which this new uh generative technology could be used but it's very similar it's based on similar kinds of concepts and and data training etc etc but um we're now asking the same things and of course we've seen this now just recently with this kind of technology being used in videos and even in ways that are more sophisticated than just these these sort of portrait pictures so this was an image it's a fake image and it's been reported all over bbc and lots of other places of donald trump supposedly being arrested in new york this was before he was actually um brought to new york on um the recent case but this of course never happened but it certainly sparked all sorts of concern and it spread like wildfire on the internet 
using um some of this you know mid-journey technology and our company like called mid-journey a lot of other companies that are creating this so it's making it easier and easier and less expensive and making it harder for us to tell what's real and so that technology is of course evolved um so okay so now i've talked about some of the democratic discourse i'm going to go through a little quicker on these other ones so then i can get to sort of the end and try to finish um in about i would say about seven minutes or so so like i mentioned at the beginning there's this issue of content creation and that's really important because these content creators um are generating a lot of the content that a lot of these technology companies are using to create these chatbots but we've seen this story before and by the way just recently there are major technology companies that are now not allowing the scraping of this data to be trained for these technologies unless they're compensating i think that's a fair thing so you know some of these big companies like reddit is not allowing stack overflow is now saying hey if you're going to scrape our data they want to be compensated and so you're going to start to see content creators starting to push back which is good because like i said we've seen this story before oh and by the way this is an example of uh you know getty images for it isn't a lawsuit right now um with a company that uh may have been scraping their data um uh illegally and the reason why that came out is because you can see this little getty images that pops up which is a watermark the getty images puts on their images so there's a pending lawsuit about this and that could determine some of the um some of how this content can or can't be uh scraped but we've seen this before uh when looking at new technology and the impact it can have on other information producers in the united states and in many places around the world we we have growing news deserts these are 
areas where there's no more local news and that's pretty devastating for democratic discourse for democracies because we depend on local news uh for engagement civic engagement and quality information and local news tends to be trusted more than national news and there's all sorts of reasons for that happening but certainly one element of that um is the effect that search engines and google in particular around it you know the way it sells ads and it takes a large cut at those ads um has potentially contributed to these news deserts of course there's lots of other things as well but but that technology everyone was excited about including myself and we use it all the time but there are these unintended effects that can affect other aspects of our information ecosystems and right now there's a there's a lawsuit going on the justice department united states justice department is suing google for monopolizing digital advertising technologies and one of those elements of this lawsuit story is is the it's the sort of increase in the demise of local news so there's all sorts of interesting things playing out right now and then the biden administration united states is thinking about you know doing some uh uh you know uh you know regulation of ai but they don't know you know it's so new to you know all administrations that it's hard to figure out like i mentioned italy has gone probably one of the furthest steps i think around this uh but there hasn't been a lot of action at least on the u.s side when it comes to the um to this um sort of thing and so you know these uh you know there's been letters so this was an article written by time i the image is quite uh quite nice it actually grows uh there's was a letter that went around from a bunch of influential people in ai and business leaders and technology including elon musk that says hey we should stop the development until we've had more time to think about although it's kind of ironic given that many of these tech 
leaders are still of course pushing their own development of uh ai in many ways and creating new ai companies and you know developing but anyway that's another story but but that is something to think about i don't think we're i don't think it's a you know i think there's no way that that that letter is going to like you know stop the technology from being developed but one thing it is good about is it's making hopefully forcing the public and journalists and government officials etc to start to think about the ways in which this technology uh could affect society we should think about it as i mentioned one of the concerns is is job demise and i think there's a lot of concern with that by those that even work in the job industry the pew research center recently at you know asked workers where you know are they concerned turns out a large number of you know workers from across these different industries are concerned um and uh there's probably good reason for this concern even sam altman at open ai which is sort of or is the owner of like chat gpt which is the you know one of the more well-known chatbots out there and and uh you know it's also the one that microsoft has invested you know billions um this this this is something that they've even said probably lose five million jobs you know i mean so these are these are real concerns so um the other thing to to mention too is that it's not just uh you know within science itself these are real concerns and and we've seen the proliferation of pseudoscience and the rise of predatory journals and content that maybe looks like science but it's not and i'll show you this is an example from many years ago this was a paper that was published in this international conference on atomic and nuclear physics and you look at the title atomic energy will be made available to a single source and you read the abstract and if you look at the abstract it doesn't make a lot of sense well it turns out that when this was created it was 
created by a person to make a point about how poor some of these journal venues and conference venues are this paper was made with autocomplete using an iphone and this is not all that different from this this you know chatbots and chat gpt which is really you know these autocomplete machines on steroids um and it looks sort of official like a real science paper but of course it was a lot of it was nonsense and the newest ones are you know much better but it is a concern that this could you know you know um increase the number of pseudoscience types of things or articles that are written by chatbots in fact there's been some articles that have been co-authored by chat gpt although some journal a lot of journals and publishers are saying they won't allow that anymore and that's probably a good thing and i will say this there's been lots of talk about the hallucination of these chatbots well one thing that i find incredibly problematic is the hallucination of citations um it'll make up citations all the time and we've been doing some work trying to find ways to to see when this happens it even happened my colleague uh carl found these examples of papers supposedly by me well these aren't real citations by me it shows my uh me right here west jones this is not uh my paper it's close to some of the papers i've written in terms of title but it's just a fake citation i just made up these citations and that's again another example of how that could affect the citation record and scholarly literature and something that i'm concerned about for science and something that's now happened with some of these chatbots even in bing now that has sort of integrated chat gpt4 into bing is that it throws references so it looks official but i can tell you that this was not done before the fact this is this knowledge that was written out in this answer when i asked about new tax laws for electric vehicles this is one question i asked that um it's not that it throwed you know learn 
something from citations and then cited them like we do in the scholarly literature it cited them post hoc something that's probably semantically similar although maybe scholars scholars probably do this too well they do do it post after posting uh you know some argument or sentence but this this is problematic to me because it looks like it's a science almost science and you know sciency and technical and it's really not even though it has those references so um you know there's been lots of discussion in the scientific literature what to do with these chatbots some have listed you know authors on papers publishers have come out even publishers in the machine learning world so icml was you know one of the first conferences not i don't think it was the first but one of the among the first that was saying you can't use chat gpt on this uh you know some are saying if you use it you have to note it you know all sorts of different policies are being created but overall you know i think the publishing community and the scholarly literature is going to have to grapple with this and i and my colleague and i have carl and i have a a another op-ed that were that we write about sort of what publishers can do around this particular issue now one of the other big concerns of this is of course that this technology shows you know it doesn't it's always 100 confident whether it's right or whether it's wrong that's really problematic at least when humans communicate they have these qualifiers of confidence where you can say something like i'm pretty sure or i i think so or i i think that's right you know these things are important and these chatbots don't have it they just are always they always seem to be well not seem they they spew out things whether they're right or wrong with 100 confidence and that's dangerous um and if there's you know one paper one of my colleagues emily bender has this great feature where um uh in this paper that she talks about and also in this feature 
um in uh in the new yorker or in this new york magazine uh they talk uh it talks a lot about some of the current concerns emily and other people that are true linguists that truly understand some of the problems with these technologies um this is one that i i would recommend reading and a lot of you may have heard of this early interview that a new york times uh journalist had with uh this you know with bing's chatbot um and it what essentially happened was the chatbot kind of went off the rails and started to say well you should leave your wife and i want to take over the world and all these things happen and of course a lot of the developers of this technology go whoa whoa whoa we need to fix it and there's been new rules around how you can use this bing chatbot and other chatbots but the problem with these fixes is they're like band-aids there's really it's very difficult to reverse engineer these problems or reverse engineer yeah reverse engineer these issues it's not like you can go to a line of code and say oh that's where the problem is um it's the way that these things are trained the way these models work with their bazillions of parameters makes it very difficult to reverse engineer and that's problematic when we run into these issues and all the issues we haven't even thought of um and so that to be is another big concern of course there's all sorts of jailbreaks to get these chatbots to do things that there have been band-aids put around and that's problematic and i won't go over all the jailbreaks you can read about because i don't want to get those out uh too much to the public but you can read about them it's not like they're that hidden um and that's problematic uh and there'll be many many others as well so these are some of the the ones i had some of these concerns reverse engineering the last two i'll mention is the cost um and the the cost we talked about jobs the costs potentially to all different aspects of society and this and the kind of 
scary thing is right now is that a lot of the tech layoffs are removing these teams they're removing other individuals as well but they're removing individuals that are on ethics and safety teams and of all times to have these kinds of teams at these companies it would be now and certainly maybe if you went back in history at the beginning of uh the rise of social media but they're being eliminated and this is a concern but there's also other kinds of costs that a lot of people forget and that's the cost of these queries there's been several analyses that have come out recently about the cost per query for running some of these uh chatbots and it's like almost an order of magnitude greater than a regular query that you'd have let's say on a google search and that has a cost and there's also costs of investing billions and millions of dollars in this technology that could also go to other kinds of investments that might be helping society so there's these costs that we have to think about the environmental costs you know the cost to society the costs of adding more you know pollution into our information systems etc there's also the issue um of garbage in garbage out and that that's a problem we're likely going to see as these chatbots generate more and more content that land online that becomes the training data for that those chatbots in you know chat you bt5 and chat gpt6 and what those effects will be uh requires some more research and thinking but the main thing that we've known in a lot for a long time of course in the machine learning world is that garbaging with your training data creates garbage out and even if you didn't have this you know feed forward a loop that i just mentioned there's a lot of garbage on the internet and that garbage finds its way into conversations with these chatbots and that's always a concern whether you're talking about science or this technology just generative ai technology more broadly so i'll end by saying that this technology 
is in many cases amazing i have mostly focused on some of my cautions and concerns like many people have especially in my world as someone who studies misinformation and misinformation specifically in science and its effect on the institution of science um uh and so uh and so these are things that we're going to have to you know pay attention to going forward um but i think like a lot of our new technology we just need time to think about it and run seminars and workshops and conferences just like this so hopefully this generates some conversation and hopefully we can uh sort out some of these cautions so with that i'll end you can reach out to me you can learn more about my book and the research we do uh in my lab and at our university and in my our center and you can um uh reach me in these ways", metadata={'source': './data/transcript.txt'})

In-memory vector store

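DocArray is an open-source library for managing data in many forms (text, images, audio and so on). Combined with LangChain, it can serve as the storage and search layer of an AI application. For example, once the audio of a YouTube video has been transcribed to text with OpenAI's Whisper model, DocArray can store that text together with its embeddings and search it efficiently. LangChain's `DocArrayInMemorySearch` keeps everything in memory, so no separate database server is needed. The stored documents can then be exposed to a LangChain chain through the vector store's `as_retriever()` method or through `DocArrayRetriever`. This makes it straightforward to build applications on top of YouTube content, such as a question-answering system or a content recommendation engine.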
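The "store and search" step can be illustrated with a minimal offline sketch of exact (brute-force) nearest-neighbour search using cosine similarity. Conceptually, an in-memory index such as `DocArrayInMemorySearch` performs this kind of search over its stored embeddings. The bag-of-words `embed()` function, the `InMemoryIndex` class and the sample chunks are all hypothetical stand-ins, chosen so the sketch runs without an API key. The post itself uses `OpenAIEmbeddings()` vectors.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical toy embedding: word counts instead of OpenAIEmbeddings() vectors
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Hypothetical index: keeps (text, vector) pairs in a list and scans all of them per query."""

    def __init__(self, texts):
        self.items = [(t, embed(t)) for t in texts]

    def search(self, query: str, k: int = 4):
        q = embed(query)
        # Exact search: score every stored chunk, then keep the k best
        scored = [(cosine(q, v), t) for t, v in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [t for _, t in scored[:k]]


chunks = [
    "news deserts are areas where there's no more local news",
    "chatbots make up citations all the time",
    "garbage in garbage out applies to training data",
]
index = InMemoryIndex(chunks)
print(index.search("why do chatbots make up citations", k=1))
```

A brute-force scan compares the query against every stored vector. That is fine for a single lecture transcript, but the cost grows linearly with the number of chunks. This is the trade-off of a simple in-memory index compared with a dedicated vector database.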

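```python
import tiktoken  # not called directly; OpenAIEmbeddings relies on the tiktoken package

from langchain.chains import RetrievalQA
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_community.vectorstores import DocArrayInMemorySearch

# Embed every transcript chunk in `docs` (loaded from ./data/transcript.txt) and keep the vectors in memory
db = DocArrayInMemorySearch.from_documents(docs, OpenAIEmbeddings())

# Expose the vector store as a retriever (similarity search, k=4 by default)
retriever = db.as_retriever()

# temperature=0.0 keeps the answers as deterministic as possible
llm = ChatOpenAI(temperature=0.0)

# "stuff": all retrieved chunks are placed into a single prompt for the LLM
qa_stuff = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=retriever,
    verbose=True,
)
```

Query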

Let's run a few actual queries and check the answers against the lecture.
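Before looking at the answers, it helps to see what `chain_type="stuff"` means. The retrieved chunks are concatenated ("stuffed") into one context string, which is sent to the LLM in a single prompt together with the question. The sketch below uses a simplified prompt of its own. `PROMPT_TEMPLATE` and `stuff_prompt()` are hypothetical, and LangChain's built-in prompt may be worded differently.

```python
# Hypothetical prompt template; LangChain's built-in "stuff" QA prompt may be worded differently
PROMPT_TEMPLATE = (
    "Use the following pieces of context to answer the question at the end.\n"
    "If you don't know the answer, just say that you don't know.\n\n"
    "{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def stuff_prompt(question: str, retrieved: list, separator: str = "\n\n") -> str:
    """Concatenate every retrieved chunk into one context block and fill in the template."""
    context = separator.join(retrieved)
    return PROMPT_TEMPLATE.format(context=context, question=question)


retrieved = [
    "news deserts are areas where there's no more local news",
    "chatbots make up citations all the time",
]
print(stuff_prompt("what is misinformation", retrieved))
```

Because everything goes into one prompt, `stuff` only works while the retrieved chunks fit in the model's context window. For larger inputs, `RetrievalQA` also accepts other chain types such as `map_reduce` and `refine`.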

```python
query = "what is misinformation"
response = qa_stuff.invoke(query)
response
```

```
> Entering new RetrievalQA chain...

> Finished chain.

{'query': 'what is misinformation',
 'result': 'Misinformation refers to false or inaccurate information that is spread, often unintentionally, leading to misunderstandings or misconceptions. It can be shared through various mediums such as social media, news outlets, or word of mouth. Misinformation can have negative impacts on individuals, communities, and society as a whole by influencing beliefs, decisions, and behaviors based on incorrect information.'}
```

```python
query = "There have been incidents due to misinformation.?"
response = qa_stuff.invoke(query)
response
```

```
> Entering new RetrievalQA chain...

> Finished chain.

{'query': 'There have been incidents due to misinformation.?',
 'result': "Yes, incidents due to misinformation have been a significant concern in various fields, including science, politics, health, and society in general. Misinformation can lead to confusion, mistrust, and even harm. In the context of generative AI and chatbots, the potential for misinformation to spread rapidly and at scale is a growing concern. Researchers like Jebin West are studying the impact of misinformation and disinformation in the digital world, particularly in the context of new technologies like generative AI. The spread of false information can have serious consequences, affecting democratic discourse, public health, and the credibility of information sources. It's essential to address and combat misinformation to promote an informed society and strengthen democratic discourse."}
```