# LLM Performance

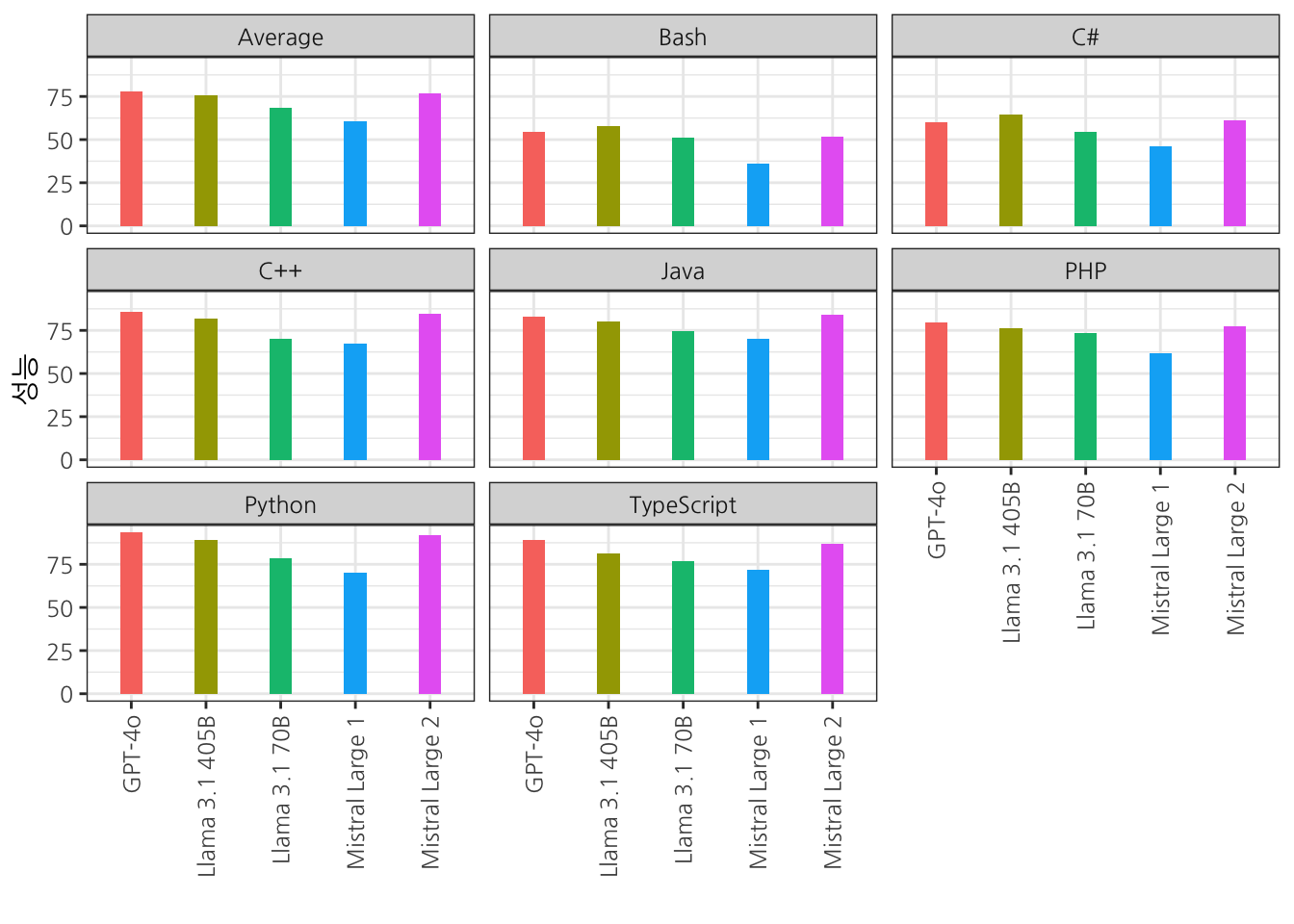

The scores reported for Mistral Large 2[^mistral], broken down by programming language, are as follows.

[^mistral]: [Mistral Large 2](https://mistral.ai/news/mistral-large-2407/)

```{r}

library(tidyverse)

# Per-language scores (plus the overall average) for each model
mistral <- tribble(
  ~Model, ~Average, ~Python, ~`C++`, ~Bash, ~Java, ~TypeScript, ~PHP, ~`C#`,
  "Mistral Large 2", 76.9, 92.1, 84.5, 51.9, 84.2, 86.8, 77.6, 61.4,
  "Mistral Large 1", 60.4, 70.1, 67.1, 36.1, 70.3, 71.7, 61.5, 46.2,
  "Llama 3.1 405B", 75.8, 89.0, 82.0, 57.6, 80.4, 81.1, 76.4, 64.4,
  "Llama 3.1 70B", 68.5, 78.7, 70.2, 51.3, 74.7, 76.7, 73.3, 54.4,
  "GPT-4o", 77.9, 93.3, 85.7, 54.4, 82.9, 89.3, 79.5, 60.1
)

# Reshape to long format (언어 = language, 성능 = score),
# then draw one facet per language with one bar per model
mistral |>
  pivot_longer(cols = -Model, names_to = "언어", values_to = "성능") |>
  ggplot(aes(x = Model, y = 성능, fill = Model)) +
  geom_col(width = 0.3, show.legend = FALSE) +
  facet_wrap(~언어) +
  labs(x = "", y = "Score") +
  theme_bw(base_family = "NanumGothic") +
  # Rotate the model names so they do not overlap on the x axis
  theme(axis.text.x = element_text(angle = 90, hjust = 1, vjust = 0.5))

```

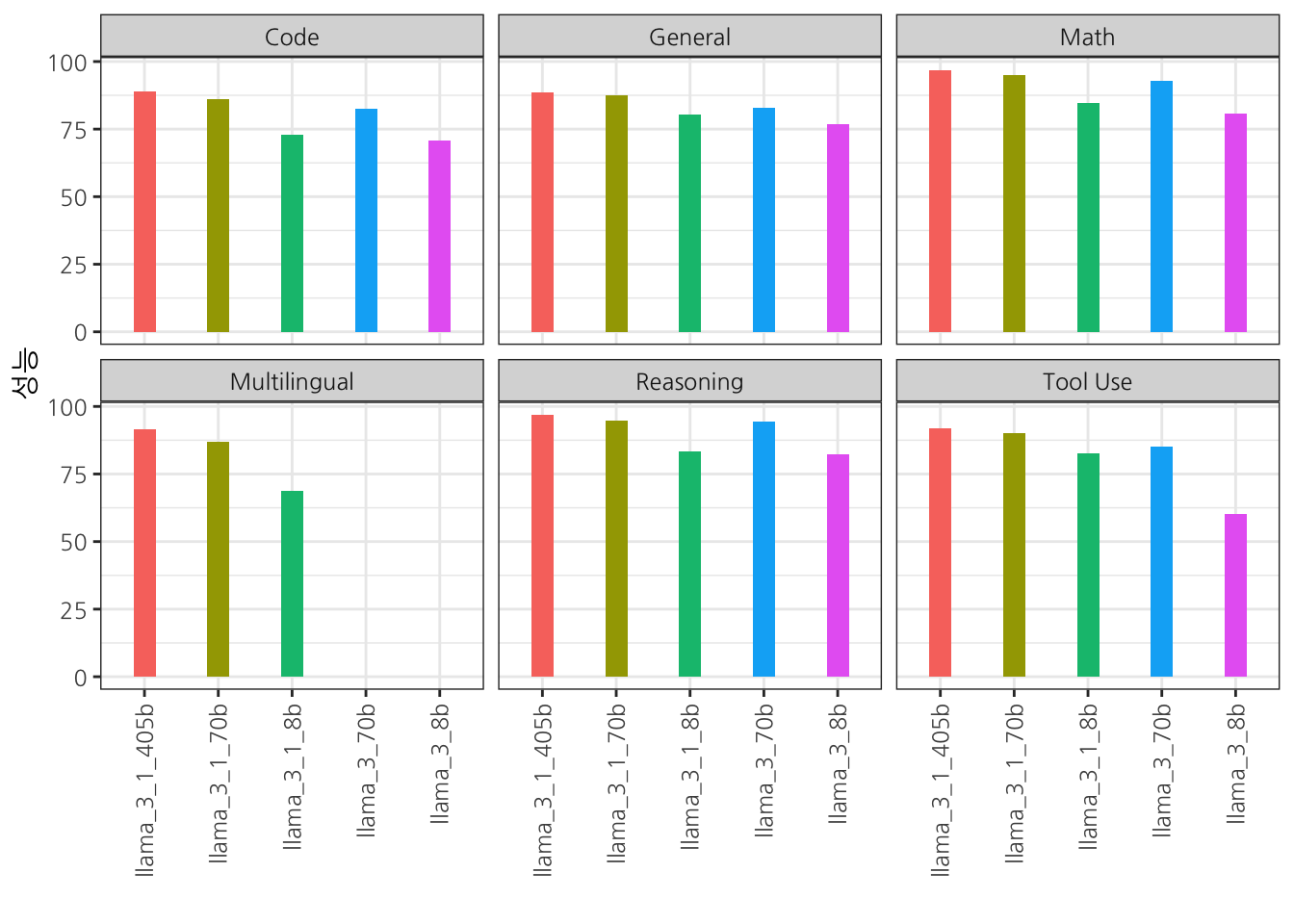
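
The chart makes it easy to compare bar heights, but the exact gap between two models is harder to read off. As a minimal sketch, the score differences relative to one reference model could be computed as shown here. The helper `score_gap()` and the column names `language`, `score`, `ref_score`, and `gap` are hypothetical names introduced for illustration only, and the demo table is a small excerpt of the values above.

```{r}
library(tidyverse)

# Hypothetical helper (not part of the original analysis): subtract the
# reference model's score from every model's score, language by language.
score_gap <- function(df, reference) {
  long <- df |>
    pivot_longer(cols = -Model, names_to = "language", values_to = "score")

  # Scores of the reference model, one row per language
  ref <- long |>
    filter(Model == reference) |>
    select(language, ref_score = score)

  long |>
    left_join(ref, by = "language") |>
    mutate(gap = score - ref_score) |>
    select(Model, language, score, gap)
}

# Two columns copied from the table above, used only as a small demo
demo <- tribble(
  ~Model, ~Python, ~Bash,
  "Mistral Large 2", 92.1, 51.9,
  "Llama 3.1 405B", 89.0, 57.6,
  "GPT-4o", 93.3, 54.4
)

score_gap(demo, reference = "Mistral Large 2")
```

A positive `gap` means the model scores higher than the reference on that language. Passing the full `mistral` table instead of `demo` would apply the same calculation to every column, including `Average`.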

## Llama 3.1

The benchmark scores for the instruction-tuned models are listed in the [Llama 3.1 model card](https://github.com/meta-llama/llama-models/blob/main/models/llama3_1/MODEL_CARD.md).

```{r}

# Benchmark scores for the instruction-tuned Llama 3 and Llama 3.1 models
# (NA marks a value that is not available)
llama <- tribble(
  ~Category, ~Benchmark, ~Shots, ~Metric, ~`Llama 3 8B Instruct`, ~`Llama 3.1 8B Instruct`, ~`Llama 3 70B Instruct`, ~`Llama 3.1 70B Instruct`, ~`Llama 3.1 405B Instruct`,
  "General", "MMLU", 5, "macro_avg/acc", 68.5, 69.4, 82.0, 83.6, 87.3,
  "General", "MMLU (CoT)", 0, "macro_avg/acc", 65.3, 73.0, 80.9, 86.0, 88.6,
  "General", "MMLU-Pro (CoT)", 5, "micro_avg/acc_char", 45.5, 48.3, 63.4, 66.4, 73.3,
  "General", "IFEval", NA, NA, 76.8, 80.4, 82.9, 87.5, 88.6,
  "Reasoning", "ARC-C", 0, "acc", 82.4, 83.4, 94.4, 94.8, 96.9,
  "Reasoning", "GPQA", 0, "em", 34.6, 30.4, 39.5, 41.7, 50.7,
  "Code", "HumanEval", 0, "pass@1", 60.4, 72.6, 81.7, 80.5, 89.0,
  "Code", "MBPP ++ base version", 0, "pass@1", 70.6, 72.8, 82.5, 86.0, 88.6,
  "Code", "Multipl-E HumanEval", 0, "pass@1", NA, 50.8, NA, 65.5, 75.2,
  "Code", "Multipl-E MBPP", 0, "pass@1", NA, 52.4, NA, 62.0, 65.7,
  "Math", "GSM-8K (CoT)", 8, "em_maj1@1", 80.6, 84.5, 93.0, 95.1, 96.8,
  "Math", "MATH (CoT)", 0, "final_em", 29.1, 51.9, 51.0, 68.0, 73.8,
  "Tool Use", "API-Bank", 0, "acc", 48.3, 82.6, 85.1, 90.0, 92.0,
  "Tool Use", "BFCL", 0, "acc", 60.3, 76.1, 83.0, 84.8, 88.5,
  "Tool Use", "Gorilla Benchmark API Bench", 0, "acc", 1.7, 8.2, 14.7, 29.7, 35.3,
  "Tool Use", "Nexus (0-shot)", 0, "macro_avg/acc", 18.1, 38.5, 47.8, 56.7, 58.7,
  "Multilingual", "Multilingual MGSM (CoT)", 0, "em", NA, 68.9, NA, 86.9, 91.6
)

llama |>
  # Convert column names to snake_case, e.g. llama_3_1_8b_instruct
  janitor::clean_names() |>
  # One row per benchmark and model
  pivot_longer(cols = starts_with("llama"), names_to = "model", values_to = "value") |>
  mutate(model = str_remove(model, "_instruct")) |>
  ggplot(aes(x = model, y = value, fill = model)) +
  geom_col(width = 0.3, position = "dodge", show.legend = FALSE) +
  # Facet by category; every benchmark row in a category contributes a bar for each model
  facet_wrap(~category) +
  labs(x = "", y = "Score") +
  theme_bw(base_family = "NanumGothic") +
  theme(axis.text.x = element_text(angle = 90, hjust = 1, vjust = 0.5))

```
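
Each category in the table groups several benchmarks, so every facet receives more than one value per model. One way to get a single bar per model in each facet is to average the benchmark scores within a category before plotting. The following is only a sketch under that assumption: `category_means()` and the `mean_value` column are hypothetical names that are not part of the original analysis, and a plain mean across benchmarks with different metrics is a rough summary rather than an official score.

```{r}
library(tidyverse)

# Hypothetical helper (not part of the original analysis): average the
# benchmark scores within each category for every model, ignoring NA values.
category_means <- function(df) {
  df |>
    pivot_longer(cols = starts_with("Llama"), names_to = "model", values_to = "value") |>
    mutate(model = str_remove(model, " Instruct")) |>
    group_by(Category, model) |>
    # A group whose values are all NA yields NaN here
    summarise(mean_value = mean(value, na.rm = TRUE), .groups = "drop")
}

# Two "Code" rows copied from the table above, used only as a small demo
code_rows <- tribble(
  ~Category, ~Benchmark, ~`Llama 3.1 8B Instruct`, ~`Llama 3.1 405B Instruct`,
  "Code", "HumanEval", 72.6, 89.0,
  "Code", "Multipl-E MBPP", 52.4, 65.7
)

category_means(code_rows)
```

Calling `category_means(llama)` on the full table would give one averaged value per category and model, which could then be passed to `ggplot()` with `facet_wrap(~Category)` in the same way as the chart above.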